Simulation

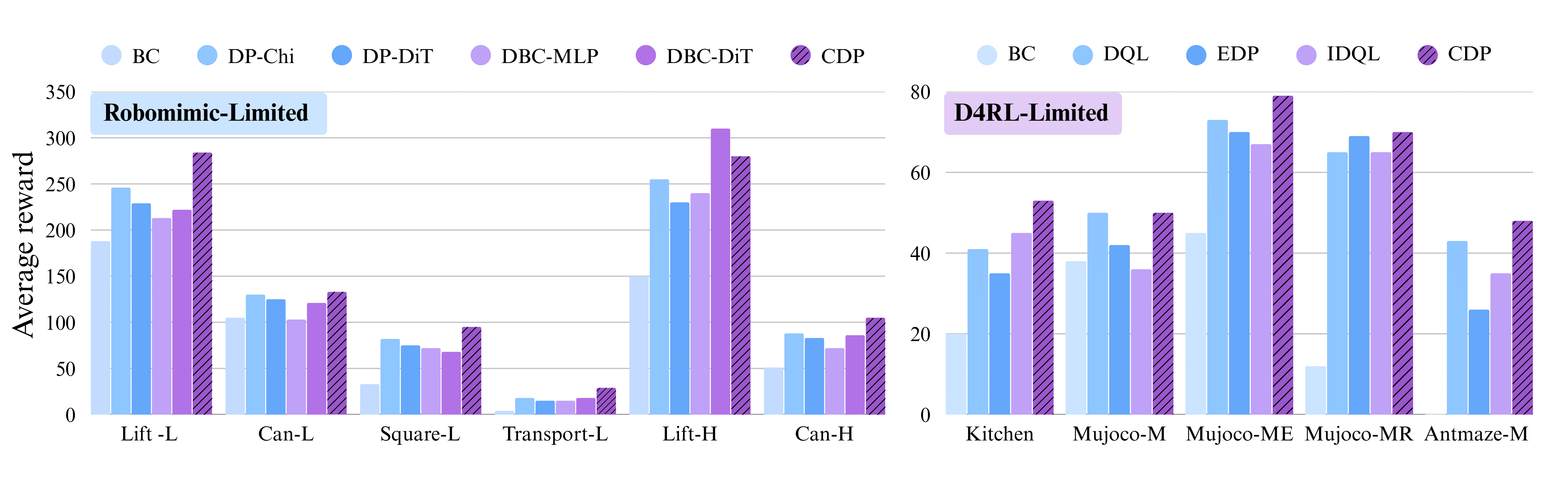

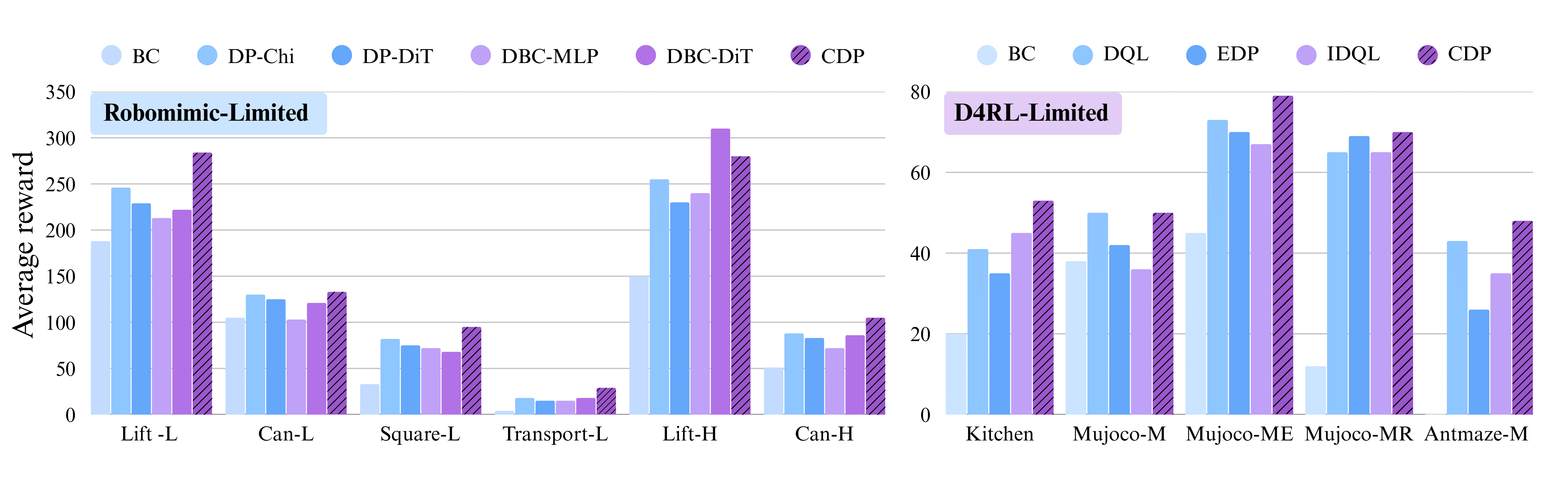

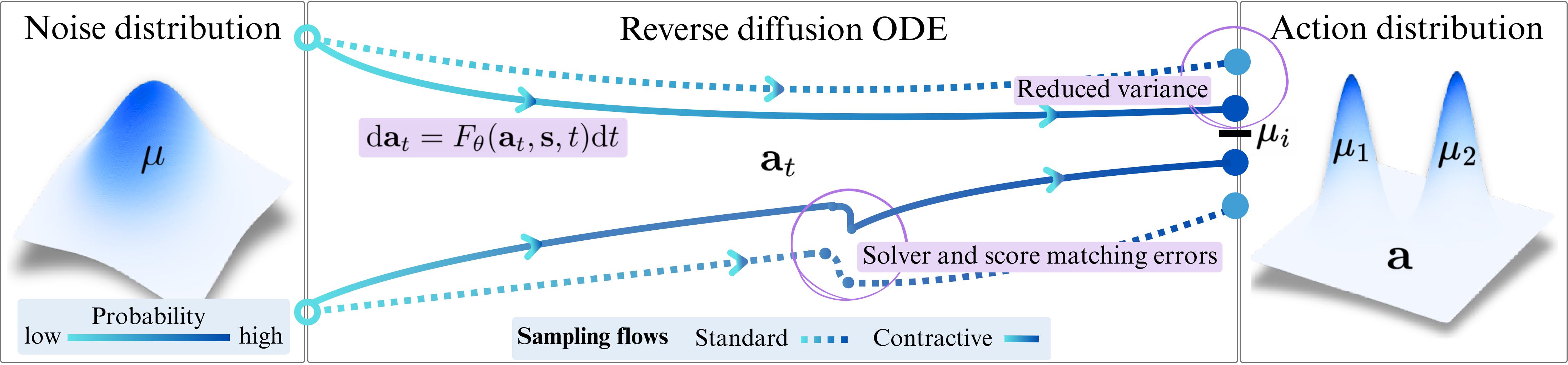

Diffusion policies have emerged as powerful generative models for offline policy learning, and their sampling process can be rigorously characterized by a score function guiding a stochastic differential equation (SDE). However, the same score-based SDE modeling that gives diffusion policies the flexibility to learn diverse behavior also incurs discretization errors, large data requirements, and inconsistencies in action generation. While tolerable in image generation, these inaccuracies compound in continuous control settings and can lead to failure. We introduce Contractive Diffusion Policies (CDPs) to induce contractive behavior in the sampling flows of diffusion SDEs. Contraction pulls nearby flows closer together, which enhances robustness against solver and score errors while mitigating unwanted action variance. We develop an in-depth theoretical analysis along with a practical implementation recipe that incorporates CDPs into existing diffusion policy architectures with minimal modification and negligible computational cost. Empirically, we evaluate CDPs for offline learning through extensive experiments in simulation and in the real world. Across benchmarks, CDPs often outperform their base policies, with pronounced benefits under data scarcity.
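As a sketch of what "contractive sampling flows" means, here is the standard definition from contraction theory applied to a generic sampling SDE. The symbols $F_\theta$ (drift containing the learned score), $\sigma(\tau)$ (state-independent noise scale), $\tau$ (sampling time) and $\lambda$ (contraction rate) are our own notation; the exact condition CDP uses and how it is enforced are given in the paper, not reproduced here.

$$
\mathrm{d}x_\tau = F_\theta(x_\tau, \tau)\,\mathrm{d}\tau + \sigma(\tau)\,\mathrm{d}\bar{w}_\tau,
\qquad
\tfrac{1}{2}\left(\frac{\partial F_\theta}{\partial x} + \frac{\partial F_\theta}{\partial x}^{\top}\right) \preceq -\lambda I
\;\Longrightarrow\;
\lVert x_\tau - x'_\tau \rVert \le e^{-\lambda \tau}\,\lVert x_0 - x'_0 \rVert
$$

Here $x$ and $x'$ are two flows driven by the same noise realization. Because the noise does not depend on the state, it cancels in the difference $e = x - x'$, which then obeys $\dot{e} = F_\theta(x,\tau) - F_\theta(x',\tau)$; the Jacobian bound gives $\tfrac{\mathrm{d}}{\mathrm{d}\tau}\tfrac{1}{2}\lVert e \rVert^2 \le -\lambda \lVert e \rVert^2$, hence the exponential decay.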

Contractive Diffusion Policies (CDPs) enhance robustness by pulling noisy action trajectories closer together, mitigating solver and score errors while stabilizing learning.
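To illustrate why contraction helps against score errors, the hypothetical toy below (not the CDP implementation) integrates two 2-D flows with forward Euler, once with the clean drift and once with a constant error `eps` added as a stand-in for score error. In the `neutral` field the error simply accumulates over time, while the `contractive` field, whose Jacobian is `-lam * I`, damps it to roughly `eps / lam`. All names and values are illustrative assumptions.

```python
import numpy as np

def euler_final(drift, x0, steps=200, dt=0.01):
    """Integrate dx/dt = drift(x) with forward Euler and return the final state."""
    x = np.asarray(x0, dtype=float).copy()
    for _ in range(steps):
        x = x + dt * drift(x)
    return x

# Illustrative values only; none of these are CDP hyperparameters.
x0 = np.array([-1.0, 1.0])      # initial noisy action
target = np.array([1.0, -0.5])  # action the flow should deliver
T = 2.0                         # total integration time (steps * dt)
lam = 5.0                       # contraction rate of the second field
eps = np.array([0.3, 0.3])      # constant drift error standing in for score error

def neutral(x):
    """Constant velocity reaching the target at time T; zero Jacobian, no contraction."""
    return (target - x0) / T

def contractive(x):
    """Pull toward the target; Jacobian is -lam * I, so nearby flows converge."""
    return -lam * (x - target)

def endpoint_error(field):
    """Distance between the endpoints of the clean flow and the error-perturbed flow."""
    clean = euler_final(field, x0)
    noisy = euler_final(lambda x: field(x) + eps, x0)
    return np.linalg.norm(noisy - clean)

err_neutral = endpoint_error(neutral)          # grows like ||eps|| * T
err_contractive = endpoint_error(contractive)  # settles near ||eps|| / lam
print(f"neutral: {err_neutral:.4f}  contractive: {err_contractive:.4f}")
```

With these values the neutral flow's endpoint is off by about `||eps|| * T` (roughly 0.85), while the contractive flow's endpoint is off by about `||eps|| / lam` (roughly 0.085).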

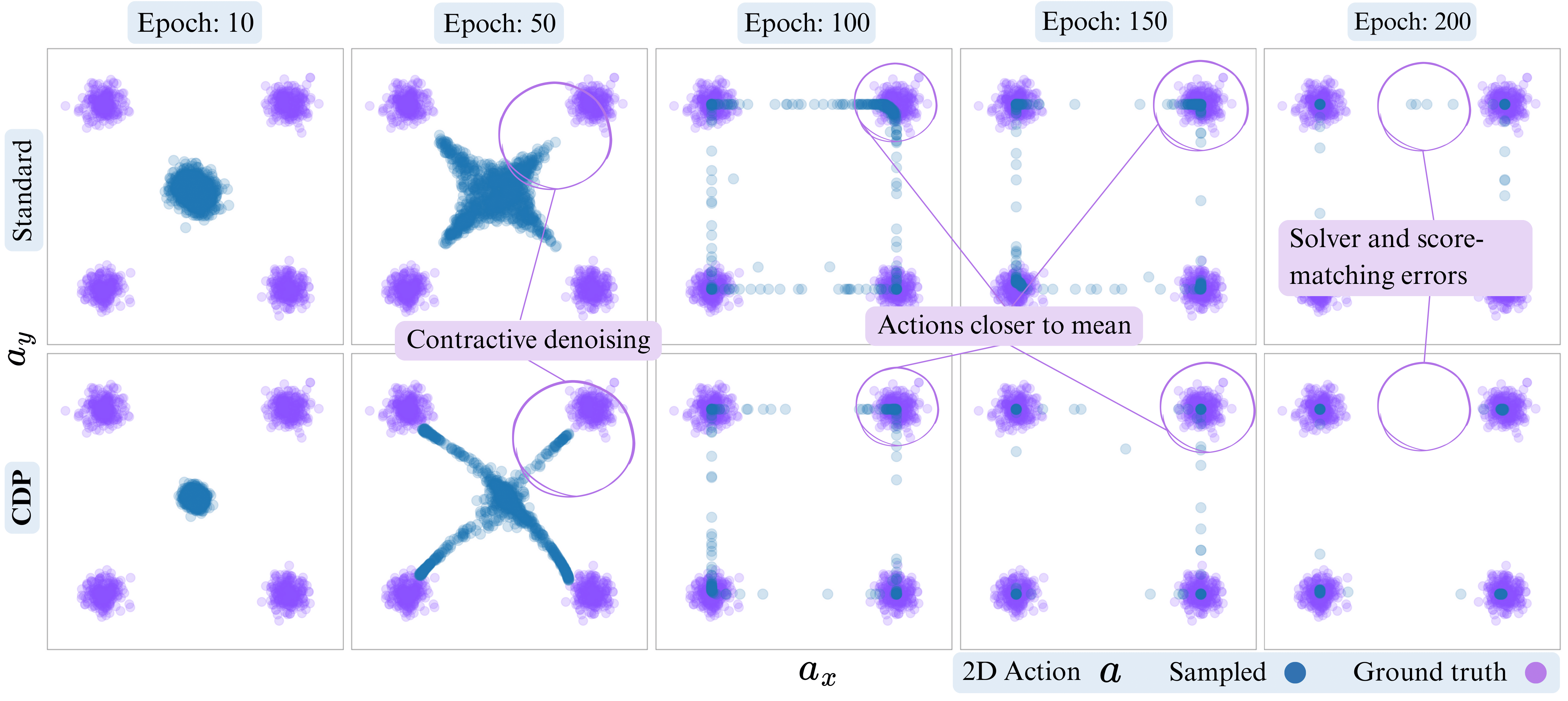

To take a closer look, we compare CDP against vanilla diffusion during training by sampling from the learned policy at each epoch. This illustrates how contraction reshapes the sampling process, concentrating actions near meaningful modes for improved accuracy.

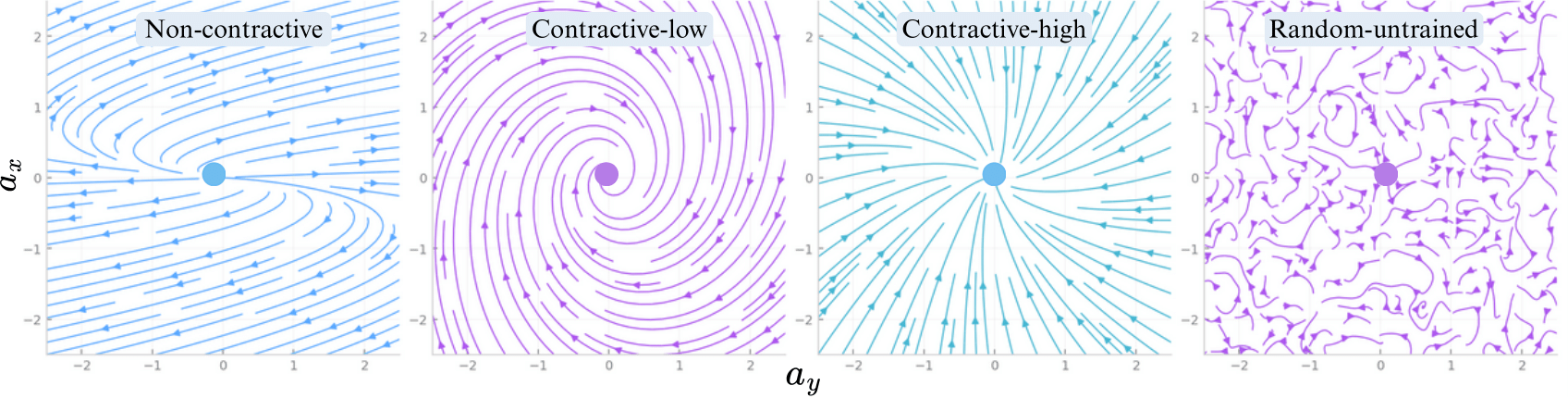

The following figure shows how contraction affects the generated flows in a toy ODE. Comparing these flows with those in the first figure helps explain why contraction is useful in diffusion sampling.
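A toy ODE in the same spirit can be sketched in a few lines. This is our own hypothetical example, not the ODE used for the figure: a cloud of nearby start points follows a pure rotation field (skew-symmetric Jacobian, no contraction) and the same rotation plus a uniform pull `-lam * x` (contracting at rate `lam`). The helper `contraction_rate` checks the standard criterion: the largest eigenvalue of the Jacobian's symmetric part must be negative.

```python
import numpy as np

rng = np.random.default_rng(0)

def simulate(A, x0, steps=500, dt=0.01):
    """Euler-integrate the linear ODE dx/dt = A x for a batch of states (rows of x0)."""
    x = x0.copy()
    for _ in range(steps):
        x = x + dt * x @ A.T
    return x

def spread(x):
    """Mean distance of the batch from its centroid."""
    return np.linalg.norm(x - x.mean(axis=0), axis=1).mean()

def contraction_rate(A):
    """Largest eigenvalue of the symmetric part of the Jacobian; negative means contracting."""
    return np.linalg.eigvalsh(0.5 * (A + A.T)).max()

rotation = np.array([[0.0, -1.0], [1.0, 0.0]])  # skew-symmetric: neither contracts nor expands
lam = 1.0                                       # assumed contraction rate
contractive = rotation - lam * np.eye(2)        # same rotation plus a uniform pull

x0 = np.array([2.0, 0.0]) + 0.2 * rng.standard_normal((64, 2))  # nearby start points

ratios = {}
for name, A in [("rotation", rotation), ("contractive", contractive)]:
    ratios[name] = spread(simulate(A, x0)) / spread(x0)
    print(f"{name}: rate={contraction_rate(A):+.2f}, spread ratio={ratios[name]:.4f}")
```

The rotation's spread ratio comes out slightly above 1 (about 1.025): forward Euler multiplies distances by `sqrt(1 + dt**2)` per step on a skew-symmetric field, a small solver error that is never corrected. The contractive field shrinks the spread to about `exp(-lam * T) = exp(-5)`. Contracting everything toward a single point is only a toy; a policy with several valid actions needs flows that concentrate near multiple modes.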

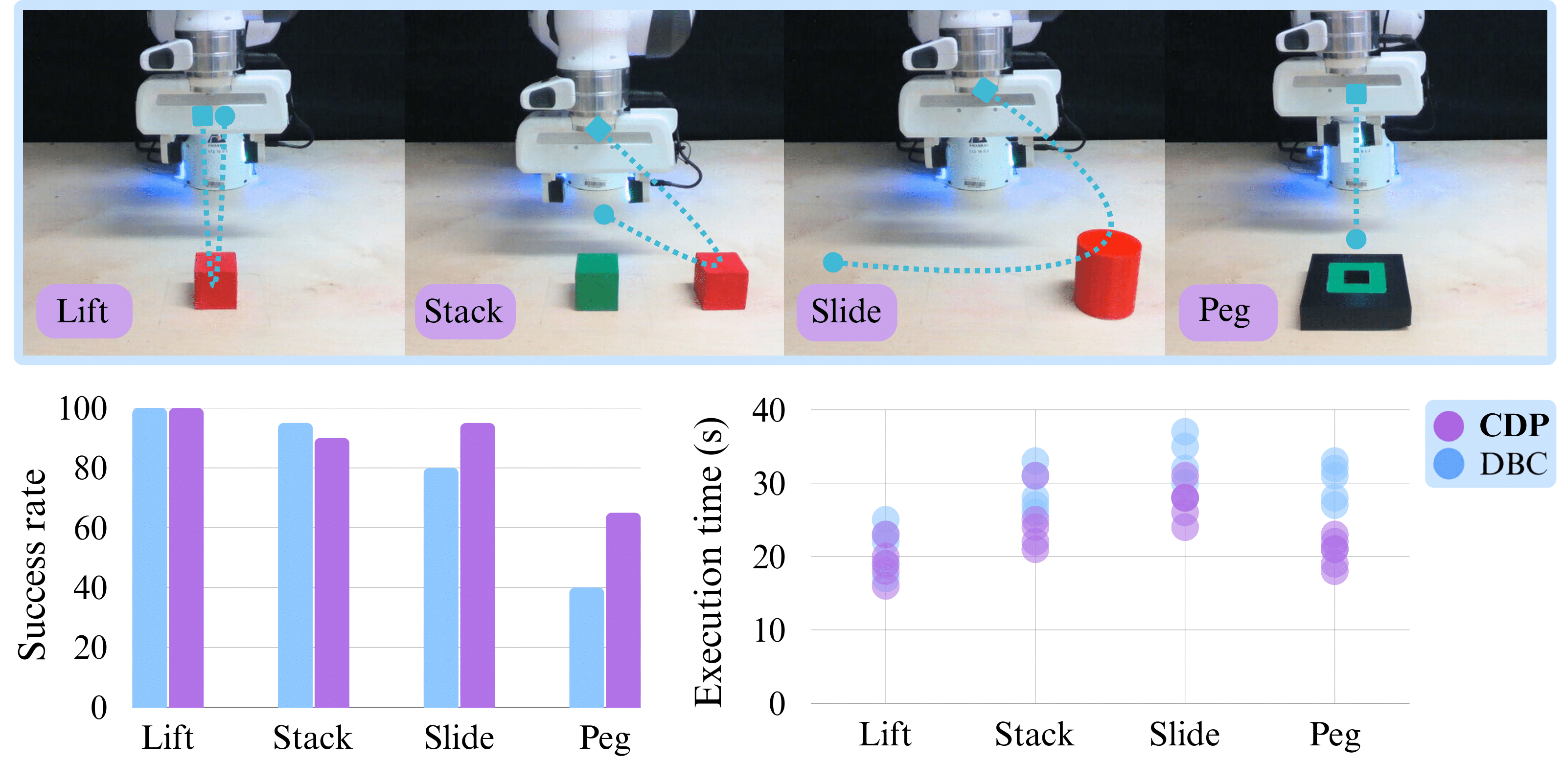

Video clips of real-world and simulated (coming soon) rollouts. CDP is mainly benchmarked in simulation, but we also deploy it on a real Franka robot arm to demonstrate its reliability in the real world.